Dogfighter UAV

As an undergraduate project, our team has designed and developed a dogfighting UAV system that is capable of identifying and tracking rival UAVs in the air. The main objective of this system is to provide a cost-effective solution for UAV surveillance and defense. The system relies on data analysis and guidance algorithms to select a target, approach it for visual contact, and pursue it with visual guidance.

Keywords

fixed-wing UAV design; target optimization; autonomous dogfight; UAV tracking; visual guidance

Abstract

In this project, I was responsible for developing the entire image processing pipeline: selecting the camera and the onboard embedded computer, developing a hybrid algorithm that fuses object detection and object tracking (with particular focus on the tracking part), and deploying these algorithms to the embedded computer.

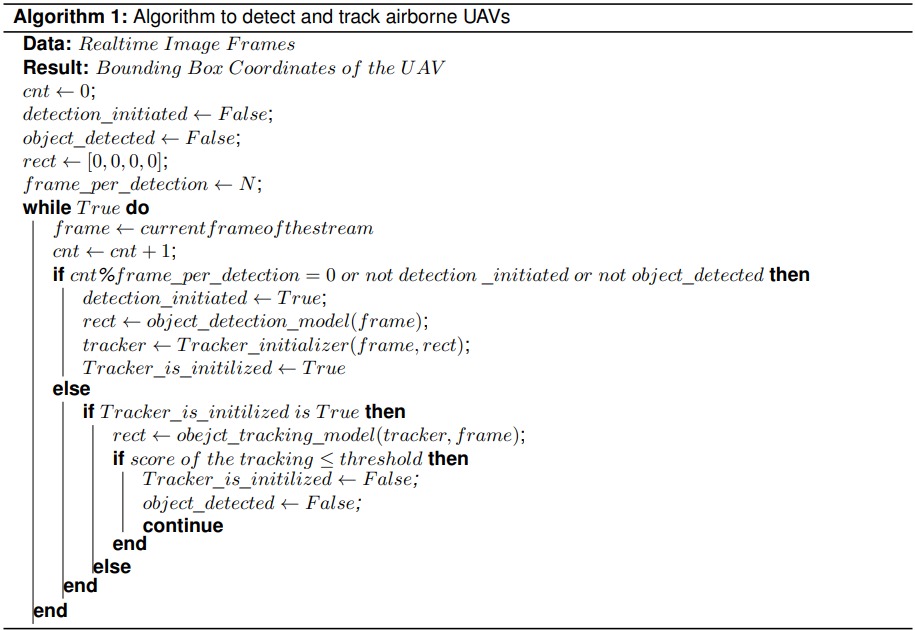

Real-time guidance requires image processing algorithms that locate the rival UAV in the onboard camera image, relative to our UAV. The image processing algorithm consists of two parts: object detection and object tracking. Once the rival UAV is detected and localized by the object detection algorithm, its output is fed into the object tracking algorithm. The tracking algorithm then follows the rival UAV for up to N frames, as long as its confidence stays within an acceptable interval. The detection and tracking algorithms run in succession so that each compensates for the deficiencies of the other, as in the figure below. For each frame, an error vector, corresponding to the offset between the centre of the bounding box and the centre of the image, is fed to the controller. During the literature research, benchmark tests of multiple state-of-the-art algorithms were reviewed to find the most appropriate algorithms for our case.
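The per-frame error vector described above can be sketched as follows. This is a minimal illustration, not the project's actual code: the function name `pixel_error`, the `(x, y, w, h)` top-left bounding-box format, and the sign convention (box centre minus image centre) are all assumptions made for the example.

```python
from typing import Tuple


def pixel_error(bbox: Tuple[float, float, float, float],
                image_size: Tuple[int, int]) -> Tuple[float, float]:
    """Return (ex, ey): bounding-box centre minus image centre, in pixels.

    bbox is assumed to be (x, y, w, h) with (x, y) the top-left corner.
    image_size is assumed to be (width, height).
    """
    x, y, w, h = bbox
    width, height = image_size
    box_cx = x + w / 2.0
    box_cy = y + h / 2.0
    # Positive ex means the target is right of centre; positive ey means
    # it is below centre (image y grows downward).
    return box_cx - width / 2.0, box_cy - height / 2.0
```

With this convention, a target exactly in the middle of the frame yields a zero error vector, so a controller would steer to drive both components toward zero.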

Combining detection and tracking allows us to perform image processing with higher accuracy on an onboard computer for an airborne guidance system, which is essential for an agile system. Tracking algorithms mostly outperform detection algorithms under illumination changes, fast motion, and small-object conditions, while detection algorithms eliminate the drift problem of tracking algorithms. Continuous tracking is therefore achieved by letting the detection algorithm set the initial state; the object is then tracked over the following N frames as long as tracking performance stays above a threshold value. As a design decision, the image processing architecture is built to run on an onboard computer, because this enables real-time control with low latency and a more agile system. Processing the images on a computer at the Ground Control Station (GCS) was also considered, but this option introduces delays in data transfer between the GCS and the UAV. Therefore, image processing algorithms that can run on onboard embedded computers were researched.
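The alternation between detection and tracking can be sketched as a small scheduling loop. This is an illustrative sketch under stated assumptions, not the project's implementation: `hybrid_track`, `detect`, `make_tracker`, `n_frames`, and `min_conf` are hypothetical names, and the detector and tracker are passed in as plain callables so that any concrete algorithm could be plugged in.

```python
from typing import Callable, Iterable, Iterator, Optional, Tuple

BBox = Tuple[float, float, float, float]
# Hypothetical tracker interface: update(frame) -> (bbox, confidence).
TrackerUpdate = Callable[[object], Tuple[BBox, float]]


def hybrid_track(frames: Iterable[object],
                 detect: Callable[[object], Optional[BBox]],
                 make_tracker: Callable[[object, BBox], TrackerUpdate],
                 n_frames: int,
                 min_conf: float) -> Iterator[Optional[BBox]]:
    """Yield one bounding box (or None) per frame.

    The detector sets the initial state; the tracker then runs for at most
    n_frames frames, and only while its confidence stays >= min_conf.
    """
    tracker: Optional[TrackerUpdate] = None
    tracked = 0
    for frame in frames:
        box: Optional[BBox] = None
        if tracker is not None and tracked < n_frames:
            box, conf = tracker(frame)
            tracked += 1
            if conf < min_conf:
                # Tracking is unreliable: discard it and re-detect below.
                box, tracker = None, None
        if box is None:
            # Either no tracker yet, the N-frame budget is spent,
            # or confidence dropped: run the detector on this frame.
            box = detect(frame)
            tracker = make_tracker(frame, box) if box is not None else None
            tracked = 0
        yield box
```

Re-running the detector after at most `n_frames` tracked frames bounds how long tracker drift can accumulate, while the confidence check lets the loop fall back to detection earlier when the tracker loses the target.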

The code for the image processing part of this project can be found in this repo. The Dogfighter UAV and a demo of the image processing algorithm can also be found below: